Earlier this month, Apple announced three new “Child Safety” initiatives:

First, new communication tools will enable parents to play a more informed role in helping their children navigate communication online. The Messages app will use on-device machine learning to warn about sensitive content, while keeping private communications unreadable by Apple.

Next, iOS and iPadOS will use new applications of cryptography to help limit the spread of CSAM online, while designing for user privacy. CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos.

Finally, updates to Siri and Search provide parents and children expanded information and help if they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics.

These initiatives are intended to combat Child Sexual Abuse Material (CSAM) and are being developed in collaboration with the National Center for Missing and Exploited Children (NCMEC). They are planned to roll out with the next iOS release.

The first part of Apple’s plans lets parents enable parental controls that block nudity detected in iMessage conversations on their children’s iPhones. If the child is under 13 and such a message is received, the content will be blocked and the parents will be alerted. If the child is between 13 and 18, the nudity will be blocked but can still be viewed if the child chooses, and the parents will not be alerted. A notorious shortcoming of iMessage today is that it has no reporting function, so someone who receives a death threat or other unwanted messages has no way to report it to Apple.

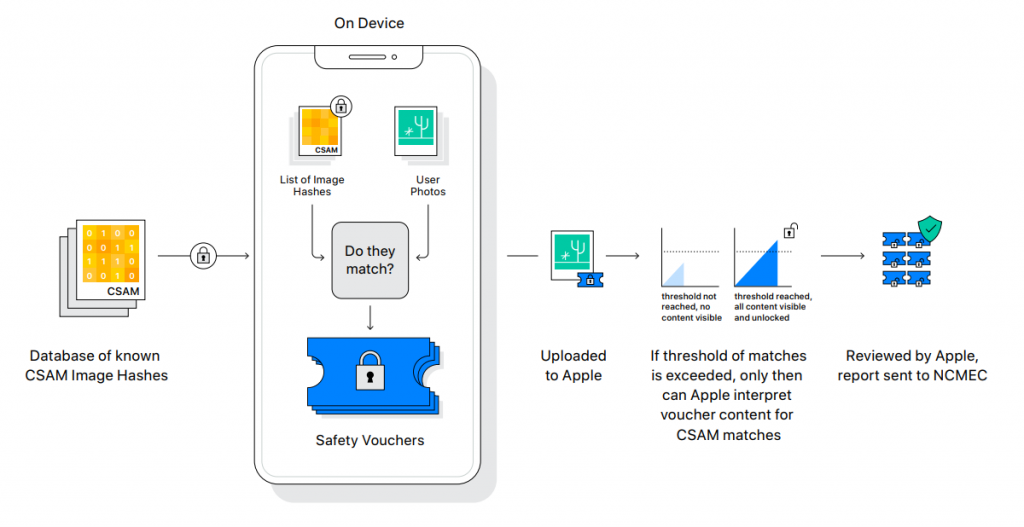

The second part of Apple’s initiatives involves scanning an iOS user’s images for CSAM by matching them against hashes of known child sexual abuse images. Apple developed a new system for this: when an image matches a known hash, the match can be decrypted by Apple using an accompanying “safety voucher” and then verified.

Ripe for Overreach

These measures may seem well-intentioned; however, they effectively create a backdoor into devices that undermines fundamental privacy protections for all users of Apple products. The announcement has set off a firestorm of discussion about civil liberties and privacy in the security community and among Apple’s own employees. The U.S. government cannot legally scan wide swaths of household equipment for contraband or compel others to do so; Apple, however, is doing it voluntarily, with potentially dire consequences. The EU, India and the UK are considering legislation later this year that would require technology providers to filter undesirable content. Apple’s move shows regulators that it has the technical means to do so and will likely lead governments to demand even more surveillance and censorship capabilities than they already have.

The Electronic Frontier Foundation has said that “Apple is opening the door to broader abuses”:

“It’s impossible to build a client-side scanning system that can only be used for sexually explicit images sent or received by children. As a consequence, even a well-intentioned effort to build such a system will break key promises of the messenger’s encryption itself and open the door to broader abuses […] That’s not a slippery slope; that’s a fully built system just waiting for external pressure to make the slightest change.”

The Center for Democracy and Technology has said the following:

“Apple is replacing its industry-standard end-to-end encrypted messaging system with an infrastructure for surveillance and censorship, which will be vulnerable to abuse and scope-creep not only in the U.S., but around the world,” says Greg Nojeim, Co-Director of CDT’s Security & Surveillance Project. “Apple should abandon these changes and restore its users’ faith in the security and integrity of their data on Apple devices and services.”

No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow. They turned a trillion dollars of devices into iNarcs—*without asking.*

Edward Snowden (@Snowden), August 6, 2021

Currently, content stored in iCloud is not end-to-end encrypted; it is encrypted with keys controlled by Apple, and Apple will decrypt it for law enforcement upon receipt of a court-issued subpoena. This move could be a preemptive step by Apple toward end-to-end encryption that still retains a child-safety mechanism. However, police and other law enforcement agencies, including those in the United Kingdom and Australia, often cite laws requiring “technical assistance” in criminal investigations, and they could use those laws to press Apple to expand this new capability.

National security, terrorism and CSAM are the ultimate pretexts of the 21st century: they are routinely cited as reasons to pass laws that infringe on our civil liberties and privacy. These issues should not be a trump card that governments can play to infringe on our privacy. The Snowden documents demonstrated that well-intentioned laws, and the technological systems that enable them, are often not used as intended and frequently lead to routine, systematic abuse.

Authoritarian and totalitarian governments are known to develop their own surveillance and censorship tooling while also procuring these capabilities from private companies. Research by The Citizen Lab has repeatedly shown how governments acquire such tooling for so-called “lawful intercept” but in reality use it to spy on and repress human rights activists, journalists, and whistleblowers. Once sources have been identified, they are often detained and tortured.

False Accusations

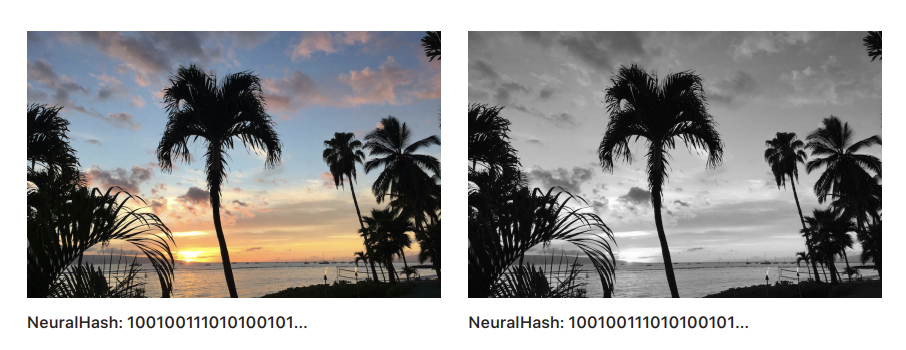

The hashes that Apple will use in this system are generated by a technology called NeuralHash, as explained in Apple’s technical document:

NeuralHash is a perceptual hashing function that maps images to numbers. Perceptual hashing bases this number on features of the image instead of the precise values of pixels in the image. The system computes these hashes by using an embedding network to produce image descriptors and then converting those descriptors to integers using a Hyperplane LSH (Locality Sensitivity Hashing) process. This process ensures that different images produce different hashes.

The embedding network represents images as real-valued vectors and ensures that perceptually and semantically similar images have close descriptors in the sense of angular distance or cosine similarity. Perceptually and semantically different images have descriptors farther apart, which results in larger angular distances. The Hyperplane LSH process then converts descriptors to unique hash values as integers.
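To make the quoted description more concrete, here is a minimal sketch of generic hyperplane LSH, the random-projection technique often called SimHash. It is not Apple’s NeuralHash: the embedding network is omitted entirely, so a "descriptor" is just a hand-written vector, and the function names, bit count, dimension and seed are illustrative assumptions. Each bit records which side of a random hyperplane the descriptor falls on, and for a random hyperplane the chance that two descriptors land on opposite sides is θ/π, where θ is the angle between them. Descriptors with a small angle between them therefore share most of their bits.

```python
import random

# Illustrative sketch of generic hyperplane LSH (random-projection "SimHash").
# NOT Apple's NeuralHash: there is no embedding network; descriptors are plain vectors.


def random_hyperplanes(n_bits: int, dim: int, seed: int = 0) -> list[list[float]]:
    """Draw n_bits hyperplane normals, each a standard Gaussian vector in R^dim."""
    rng = random.Random(seed)  # hypothetical fixed seed so results are reproducible
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def hyperplane_lsh(descriptor: list[float], planes: list[list[float]]) -> int:
    """Pack one bit per hyperplane into an integer.

    A bit is 1 when the descriptor lies on the non-negative side of that
    hyperplane (dot product >= 0), otherwise 0.
    """
    value = 0
    for normal in planes:
        if len(normal) != len(descriptor):
            raise ValueError("descriptor and hyperplane dimensions differ")
        dot = sum(d * n for d, n in zip(descriptor, normal))
        value = (value << 1) | (1 if dot >= 0 else 0)
    return value


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    planes = random_hyperplanes(n_bits=16, dim=8, seed=42)
    x = [0.3, -1.2, 0.8, 0.05, 2.0, -0.4, 0.9, -0.7]
    x_scaled = [2.0 * v for v in x]                  # same direction: angle 0
    x_nudged = [v + 0.01 for v in x]                 # small angular change
    y = [-0.9, 0.4, 1.1, -1.5, 0.2, 0.6, -0.3, 1.0]  # unrelated descriptor
    h = hyperplane_lsh(x, planes)
    print(hamming(h, hyperplane_lsh(x_scaled, planes)))  # 0: only the direction matters
    print(hamming(h, hyperplane_lsh(x_nudged, planes)))  # usually small
    print(hamming(h, hyperplane_lsh(y, planes)))         # usually larger
```

In this generic form, similar descriptors usually, but not always, map to exactly the same integer; a few bits can flip when a descriptor sits close to one of the hyperplanes.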

Traditionally, this matching was done by computing cryptographic hashes of files (such as SHA-256 or MD5) and comparing them against hashes of known content. However, if an image was resized, re-encoded or changed even slightly, its SHA-256 and MD5 hashes would change completely. NeuralHash addresses this by ensuring that identical and visually similar images produce the same hash. Apple claims a false positive rate of one in a trillion, but it is unclear how such a figure could be tested, since no CSAM data set is large enough to test it on. While Apple may claim that a hash collision is extremely unlikely, the lack of independent testing and transparency around NeuralHash is a serious concern for privacy advocates.
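The difference between file hashes and perceptual hashes can be shown with a short Python sketch. It swaps in average hash (aHash), a well-known and much simpler perceptual hash, purely for illustration; it is not NeuralHash. The assumptions are loud ones: a hypothetical 4×4 grayscale grid stands in for an image, its raw pixel bytes stand in for the image file, and brightening every pixel by 3 stands in for a re-encode or minor edit. Real perceptual hashes also downscale the image first, a step skipped here.

```python
import hashlib

# Toy comparison: cryptographic digests vs. a simple perceptual hash.
# average_hash (aHash) is used purely for illustration; it is NOT NeuralHash.

Image = list[list[int]]  # hypothetical grayscale image: rows of 0-255 values


def to_bytes(pixels: Image) -> bytes:
    """Serialize pixels row by row, standing in for an image file's bytes."""
    return bytes(p for row in pixels for p in row)


def crypto_digests(data: bytes) -> tuple[str, str]:
    """SHA-256 and MD5 hex digests of the raw bytes."""
    return hashlib.sha256(data).hexdigest(), hashlib.md5(data).hexdigest()


def average_hash(pixels: Image) -> int:
    """One bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    value = 0
    for p in flat:
        value = (value << 1) | (1 if p > mean else 0)
    return value


if __name__ == "__main__":
    img = [[10, 200, 30, 220], [15, 210, 25, 230], [12, 190, 35, 240], [18, 205, 28, 215]]
    brighter = [[min(255, p + 3) for p in row] for row in img]  # slight edit

    # Any change to the bytes produces entirely different file hashes.
    print(crypto_digests(to_bytes(img)) == crypto_digests(to_bytes(brighter)))  # False
    # The perceptual hash only compares pixels to the mean, so it is unchanged.
    print(f"{average_hash(img):016b}")       # 0101010101010101
    print(f"{average_hash(brighter):016b}")  # 0101010101010101
```

The same tolerance that lets a perceptual hash survive edits is what makes collisions between different images possible, which is why the false positive rate matters.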

An important question to ask is “What happens when a false positive occurs?”

The initial hashes that Apple will use come from NCMEC; however, there is no technical limitation preventing hashes from coming from another source. The CSAM images and videos that NCMEC holds, and from which these hashes are generated, were reported to it by law enforcement and by third parties such as online platforms that allow users to upload content. These include forums, photo-editing sites and social media sites, to name a few. It is unclear how much actual CSAM is in the list of hashes, since legitimate adult content can easily be misclassified as CSAM. This is especially true given the explosion of amateur content on adult entertainment websites, which may lack the polished look of professionally produced content. Filters, camera angles and Photoshop can easily make a legal pornographic image appear to be CSAM.

Online platforms are required under U.S. law (18 U.S.C. § 2258A, in Title 18, Part I, Chapter 110) to report CSAM or face significant fines:

18 USC § 2258A:

(a) Duty To Report.—(1) In general.—

(A) Duty.—In order to reduce the proliferation of online child sexual exploitation and to prevent the online sexual exploitation of children, a provider— (i) shall, as soon as reasonably possible after obtaining actual knowledge of any [CSAM], take the actions described in subparagraph (B); and (ii) may, after obtaining actual knowledge of any facts or circumstances [suggesting imminent child abuse], take the actions described in subparagraph (B).

(B) Actions described.—The actions described in this subparagraph are— (i) providing to the CyberTipline of NCMEC [National Center for Missing & Exploited Children], or any successor to the CyberTipline operated by NCMEC, the mailing address, telephone number, facsimile number, electronic mailing address of, and individual point of contact for, such provider; and (ii) making a report of such facts or circumstances to the CyberTipline, or any successor to the CyberTipline operated by NCMEC.

This creates an incentive for platforms to over-report, sending pornographic material to NCMEC even when it may not be CSAM. Misclassified legal images could then lead to innocent people being falsely accused. Trolls could also abuse the reporting functions of social platforms to trigger investigations, much as swatting does. If law enforcement were to execute a search warrant because of a CSAM notification that turned out to be a false positive, the search alone could sow distrust among the alleged perpetrator’s neighbors and community, even though that person is completely innocent.

This scenario is made worse if the search is reported by local media, which can have a lasting and devastating effect on the alleged perpetrator. In a world where information and misinformation are shared extremely quickly, it is not uncommon for innocent people to have their lives ruined by media misreporting.

“Screeching Voices of the Minority”

After Apple’s initial announcement, there was an outcry in the security and privacy community. Over 8 500 people have signed an open letter asking Apple to halt the deployment of its content-monitoring technology and to issue a statement reaffirming its commitment to end-to-end encryption and user privacy.

Following the announcement, a leaked internal memo that NCMEC sent to Apple staff said the following:

Team Apple,

I wanted to share a note of encouragement to say that everyone at NCMEC is SO PROUD of each of you and the incredible decisions you have made in the name of prioritizing child protection.

It’s been invigorating for our entire team to see (and play a small role in) what you unveiled today.

I know it’s been a long day and that many of you probably haven’t slept in 24 hours. We know that the days to come will be filled with the screeching voices of the minority.

Our voices will be louder.

Our commitment to lift up kids who have lived through the most unimaginable abuse and victimizations will be stronger.

During these long days and sleepless nights, I hope you take solace in knowing that because of you many thousands of sexually exploited victimized children will be rescued, and will get a chance at healing and the childhood they deserve. Thank you for finding a path forward for child protection while preserving privacy.

Apple has routinely engaged in privacy grandstanding against its competitors and has run ads across multiple media reiterating its stance on privacy. Make no mistake: this capability will be used by governments in the future to further infringe on our civil liberties.

If critics and those who believe in privacy and civil liberties are going to be called the “screeching voices of the minority”, then I will never stop being a “screeching voice of the minority” and neither should you.

In fact, thousands of voices, including privacy and security advocates, agree that this is a bad idea by Apple. I encourage you to sign the Electronic Frontier Foundation (EFF) petition to Apple, which already has more than 14 000 signatures, and to read the global coalition’s open letter to Apple, which has been signed by 91 privacy, civil liberties and security organizations.